The NHS has ambitious plans to transform health care through implementing innovations and new ways of working. Evaluating these changes is vitally important, to learn what works and why. Logic models are increasingly used to plan and evaluate national programmes like the Better Care Fund or the new care models vanguards. Yet, as an evaluator, most recently on the Care City Test Bed, I have found logic models to be extremely tricky tools. So how can evaluators, implementers and commissioners of evaluations get the most out of them?

What is a logic model?

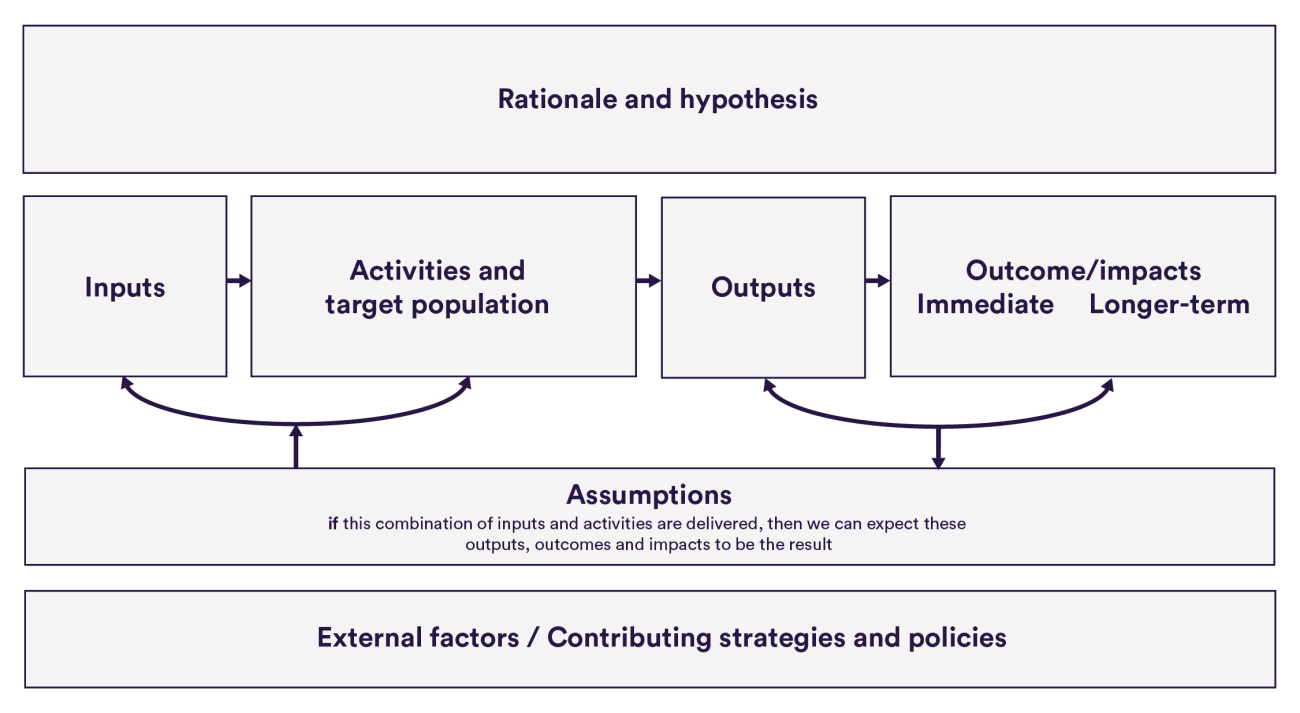

A logic model (also known as a programme theory, programme logic or theory of change) is a visual representation of how your intervention is supposed to work – and why it is a good solution to the problem at hand.

While formats vary, most focus on a causal chain of: inputs → activities → outputs → outcomes. Logic models need a clear description of the problem you are fixing, and should set out assumptions about how the proposed combination of inputs and activities will produce change. These assumptions should be based on previous evidence and/or be grounded in local experience and common sense.
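For teams that keep their logic models alongside other project files, the causal chain can also be written down as a small data structure. The following is a minimal Python sketch, not a standard tool: the `LogicModel` class, its field names and the example entries are all hypothetical, chosen only to illustrate the inputs → activities → outputs → outcomes chain and a simple check for elements that have been left blank.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# The four stages of the causal chain, in order.
STAGES = ("inputs", "activities", "outputs", "outcomes")


@dataclass
class LogicModel:
    """Hypothetical container for a logic model; not a standard format."""

    problem: str
    inputs: list[str] = field(default_factory=list)
    activities: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)
    outcomes: list[str] = field(default_factory=list)
    assumptions: list[str] = field(default_factory=list)

    def gaps(self) -> list[str]:
        """Return the names of elements that have been left empty."""
        missing = [] if self.problem.strip() else ["problem"]
        missing += [stage for stage in STAGES if not getattr(self, stage)]
        if not self.assumptions:
            missing.append("assumptions")
        return missing

    def chain(self) -> str:
        """Render the causal chain as one line, marking empty stages with '?'."""
        return " → ".join(
            f"{stage}: {', '.join(getattr(self, stage)) or '?'}"
            for stage in STAGES
        )


# Illustrative, made-up entries; not taken from the Care City evaluation.
model = LogicModel(
    problem="Patients with an undiagnosed condition are identified late",
    inputs=["screening device", "staff training time"],
    activities=["offer screening at routine appointments"],
    outputs=["number of patients screened"],
    outcomes=[],  # deliberately left empty to show the check
)

print(model.gaps())   # ['outcomes', 'assumptions']
print(model.chain())
```

A check like `gaps()` only flags elements that are missing altogether. It cannot judge whether the stated assumptions are plausible, which still depends on evidence and local experience.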

The advantages and disadvantages

Developing logic models with teams implementing the innovations can be helpful in clarifying assumptions about how the delivery of an intervention will lead to the expected benefits. The use of logic models can also create a common language and produce a visually engaging point of reference that can be revisited throughout an intervention’s lifetime.

Yet, creating a ‘logical’ plan can be difficult in practice. Interventions are often designed by either front-line teams who draw on their implicit assumptions rather than on an explicit theory of change, or by management teams who do not understand the complexities of on-the-ground delivery. These factors make it easy to end up with a plan that is full of holes or contradictions.

Ensuring that these widely recognised challenges are managed is essential to getting the most out of logic models and the proposed interventions.

How to get the most out of logic models: lessons from the Care City evaluation

Build in time to be inclusive. Acceptance of a new digital technology by front-line staff can make or break its success. When developing initial programme theories for each digital technology, Care City engaged with tech companies and clinical advisors. Because pilot sites were continually being recruited as part of the Test Bed programme, finding a consensus on the problem, implementation plan and outcomes took at least six months for each technology. The effort required to build a programme theory therefore exceeded expectations.

Communicate, communicate, communicate. The Nuffield Trust evaluation team has been updating and sharing the logic model attached to each technology since the start of the programme. But it was only when we interviewed pilot sites six months into the programme that we realised that some of them believed the logic models were project management tools exclusively for Care City (“like a Gantt chart”). Other managers at pilot sites had not forwarded the relevant logic models to colleagues for fear of overloading them with information. On reflection, communicating the purpose and benefits of the logic model more prominently to Test Bed partners from the outset might have prevented these misunderstandings.

Never stop being critical. Creating ‘logical’ logic models has been difficult. One major reason is that there has been an implicit urgency among programme partners to get implementation underway and ‘to test, refine and learn along the way’. But agile implementation should be balanced against allowing time to think critically about the existing evidence and local experiences that must underpin each intervention’s theory of change. If a technology doesn’t fit with the overall patient journey, or it is not sufficiently clear what problem is being addressed, then the benefits could be limited.

When should evaluations use a logic model?

The balance of advantages and disadvantages of using logic models to plan, monitor or evaluate interventions will vary across projects. Whether or not you use them, it is important to make the underlying assumptions and the problem being fixed explicit. But choose wisely, as the time and resources they require may not always be worthwhile.

Keep in mind that logic models are neither operational plans (because they lack detail about actions for each stakeholder), nor are they research protocols (because methods are not typically included). But logic models can be an essential part of an intervention designer’s or evaluator’s toolkit.

Suggested citation

Kumpunen S (2020) “Using logic models for evaluating innovations in health care”, Nuffield Trust comment.